Go to Settings >> Log Sources from the navigation bar and click Add Log Source.

Click Create New and select Universal REST API.

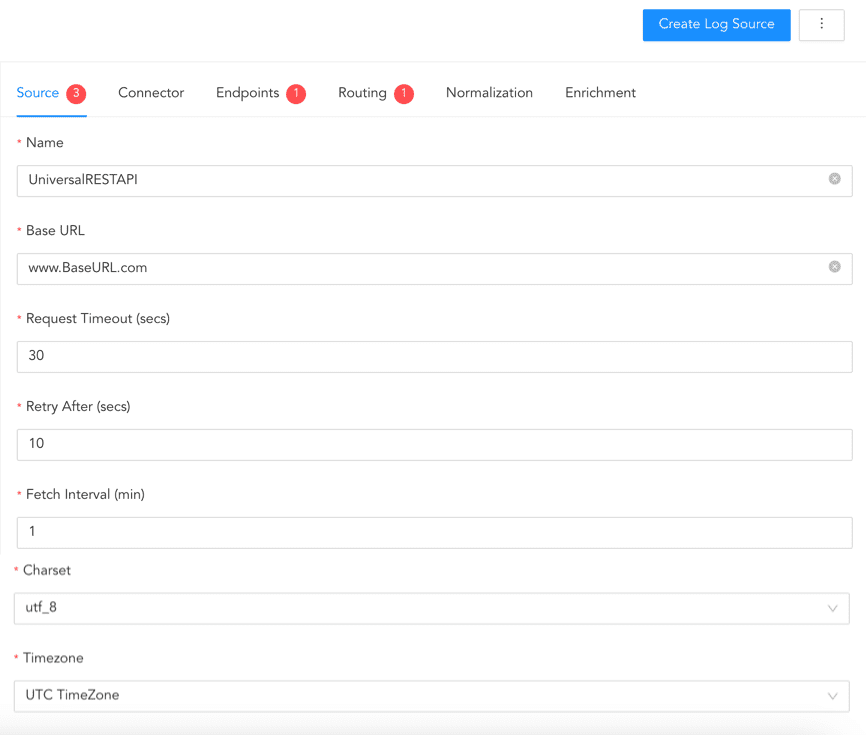

In Source, add details about the log source from which the Universal REST API Fetcher fetches logs.

1. Click Source.

2. Enter the Log Source’s Name.

3. In Base URL, enter the base URL of the RESTful API.

4. Enter the Request Timeout (secs) for the API request.

5. In Retry After (secs), enter the time to wait before retrying after an error or timeout.

6. Enter the Fetch Interval (min) and select the Charset.

7. Select the Timezone of the log source if the timestamps in its responses are not in UTC. If they are in UTC, the Universal REST API Fetcher sets the time automatically.
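As a rough illustration of what the Timezone setting does, the Python sketch below interprets a timestamp that carries no UTC offset as local time in the log source's timezone and converts it to UTC. It is not URAF's implementation; the function name `to_utc` is hypothetical.

```python
from datetime import datetime, timedelta, timezone, tzinfo


def to_utc(naive: datetime, source_tz: tzinfo) -> datetime:
    """Treat a timestamp without an offset as local time in source_tz and convert it to UTC."""
    if naive.tzinfo is not None:
        # The timestamp already carries an offset, so no timezone assumption is needed.
        return naive.astimezone(timezone.utc)
    return naive.replace(tzinfo=source_tz).astimezone(timezone.utc)


# A log source whose responses use UTC+02:00 (for example, Central European Summer Time).
cest = timezone(timedelta(hours=2), "CEST")
print(to_utc(datetime(2023, 4, 27, 9, 18, 52), cest))  # 2023-04-27 07:18:52+00:00
```

Passing an IANA zone such as `zoneinfo.ZoneInfo("Europe/Copenhagen")` as `source_tz` instead of a fixed offset would also account for daylight-saving changes.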

Configuring Source

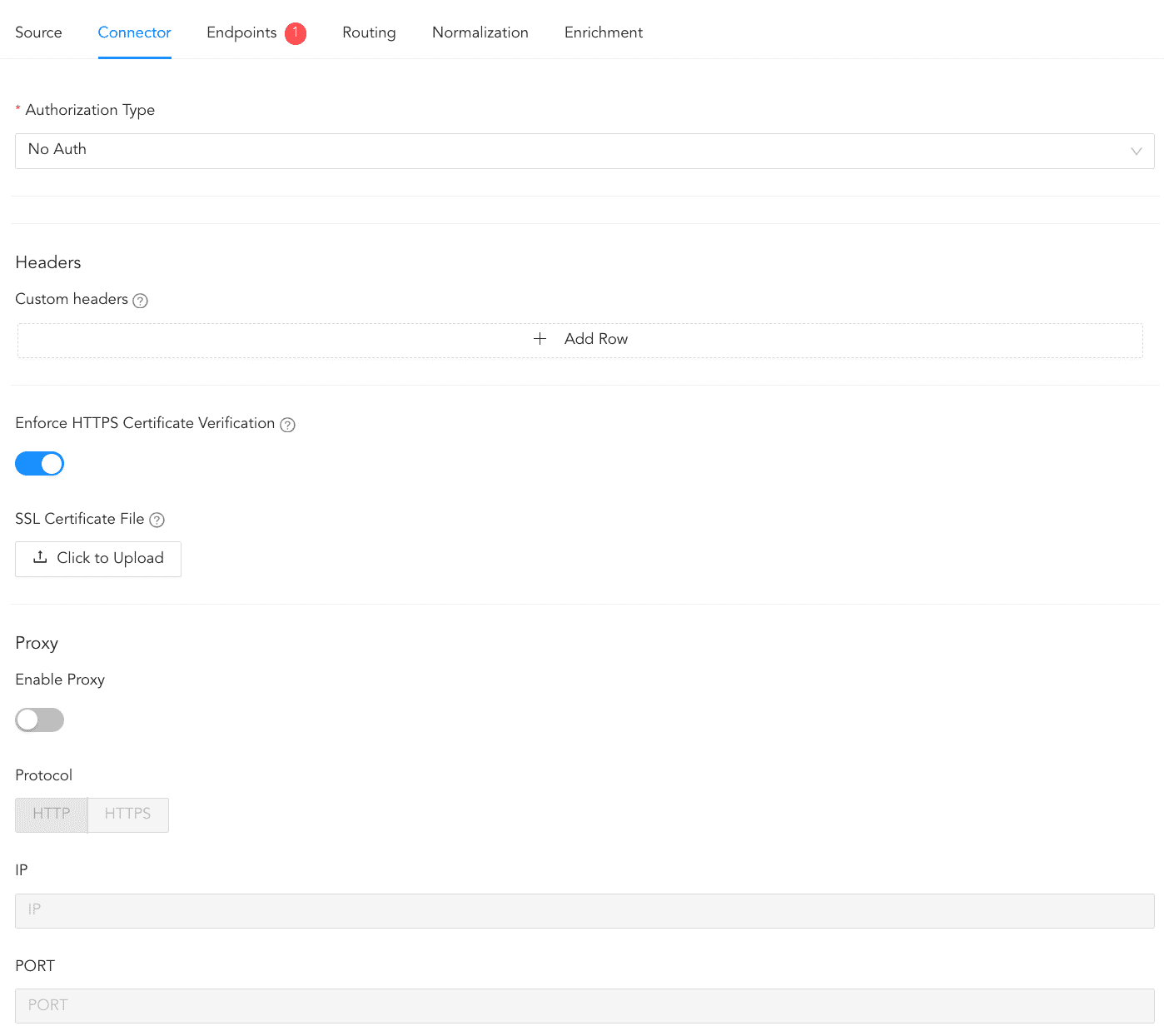

In Connector, you must configure how the Universal REST API Fetcher and the log source communicate with each other.

1. Click Connector.

2. Select the Authorization Type. For more information on the authorization types supported by URAF, go here.

2.1. Select No Auth if no authentication is required.

2.2. Select Basic to use a username and password to authenticate.

2.2.1. In Credentials, enter the Username and Password.

2.3. Select OAuth2 to authenticate using OAuth 2.0. Enter the following details in OAUTH 2.0 BASIC INFORMATION.

2.3.1. Enter the Token URL of the server.

2.3.2. Select either Client Credentials or Password Credentials as the Grant Type.

2.3.2.1. If you select Client Credentials, enter the OAuth secret password in Client Secret.

2.3.2.2. If you select Password Credentials, enter the OAuth Username and Password.

2.3.3. In Client ID, enter the OAuth application ID or client ID. If the vendor requires a client secret, enter Client Secret.

2.3.4. In API Key Prefix, enter the prefix to add to the authorization header before the API Key or Token.

2.3.5. Select whether to send the client credentials as a basic auth header or in the body.

2.3.6. In ADDITIONAL BODY FOR OAUTH 2.0, enter the key and value of any extra parameters.

2.4. Select Token Based to authenticate using an API token.

2.4.1. Enter the Token URL.

2.4.2. In Request Header, click Add Row and enter the Key and Value of the request header used to send the access token.

2.4.3. In Request Body, click Add Row and enter the Key and Value used to send the credentials to obtain the access token.

2.4.4. In Access Token Response Path, enter the location of the access token in the response. If the token is nested under a data key, use data.access_token. In Access Token Expiry (min), enter the number of minutes after which the API token expires.

2.4.5. In Access Token Field Name, enter the name of the field used to send the access token.

2.5. Select API Key to authenticate using an API key.

2.5.1. In Secret Key, enter the API key. This key is used in the authorization header.

2.5.2. Optionally, in API Key Prefix, enter the prefix to add to the authorization header before the API key or token.

2.6. Select Digest to authenticate using digest access authentication.

2.6.1. In Credentials, enter the Username and Password.

2.7. Select Custom to authenticate using an integration that applies custom authentication mechanisms and request handling.

2.7.1. Select a Product. The list shows the integrations supported by the Universal REST API Fetcher that require custom authentication mechanisms and request handling, such as Duo Security and Cybereason. These integrations must also be installed on Logpoint.

3. In Key and Value, enter any custom headers for the RESTful API.

4. Enable Enforce HTTPS certificate verification to verify the server's certificate and establish a secure connection.

If the server uses a self-signed certificate, you can add an SSL Certificate File. Select Click to Upload, then select a certificate file in PEM format (.pem) or a PEM-encoded .crt file. The SSL certificate enables an encrypted connection between the server and Logpoint.

5. Enable Proxy to use a proxy server.

5.1. Select either the HTTP or HTTPS protocol.

5.2. Enter the proxy server IP address and port number.
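The Python sketch below shows how a client typically builds credentials for some of the authorization types above. It is an illustration under assumptions, not URAF's own code: the function names are hypothetical, the API Key Prefix is assumed to be separated from the key by a single space, and only the Client Credentials grant of OAuth2 is shown.

```python
import base64
from typing import Any, Dict, Optional, Tuple
from urllib.parse import urlencode


def basic_auth_header(username: str, password: str) -> Dict[str, str]:
    """Basic: base64("username:password") in the Authorization header (RFC 7617)."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


def api_key_header(secret_key: str, prefix: Optional[str] = None) -> Dict[str, str]:
    """API Key: the Secret Key, optionally preceded by the API Key Prefix (assumed space-separated)."""
    return {"Authorization": f"{prefix} {secret_key}" if prefix else secret_key}


def oauth2_client_credentials_request(
    client_id: str,
    client_secret: str,
    send_in_basic_header: bool = True,
    additional_body: Optional[Dict[str, str]] = None,
) -> Tuple[Dict[str, str], str]:
    """OAuth2 Client Credentials: headers and form body for a POST to the Token URL."""
    headers = {"Content-Type": "application/x-www-form-urlencoded"}
    body: Dict[str, str] = {"grant_type": "client_credentials"}
    if send_in_basic_header:
        # Client credentials sent as a basic auth header.
        headers.update(basic_auth_header(client_id, client_secret))
    else:
        # Client credentials sent in the request body.
        body.update(client_id=client_id, client_secret=client_secret)
    body.update(additional_body or {})  # extra key-value pairs, as in ADDITIONAL BODY FOR OAUTH 2.0
    return headers, urlencode(body)


def token_from_response(response: Dict[str, Any], response_path: str) -> Any:
    """Token Based: follow a dotted Access Token Response Path such as "data.access_token"."""
    value: Any = response
    for key in response_path.split("."):
        value = value[key]
    return value
```

A standard OAuth 2.0 token response carries the token in `access_token` (RFC 6749), so the same lookup works for a flat path (`access_token`) and a nested one (`data.access_token`).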

Configuring Connector

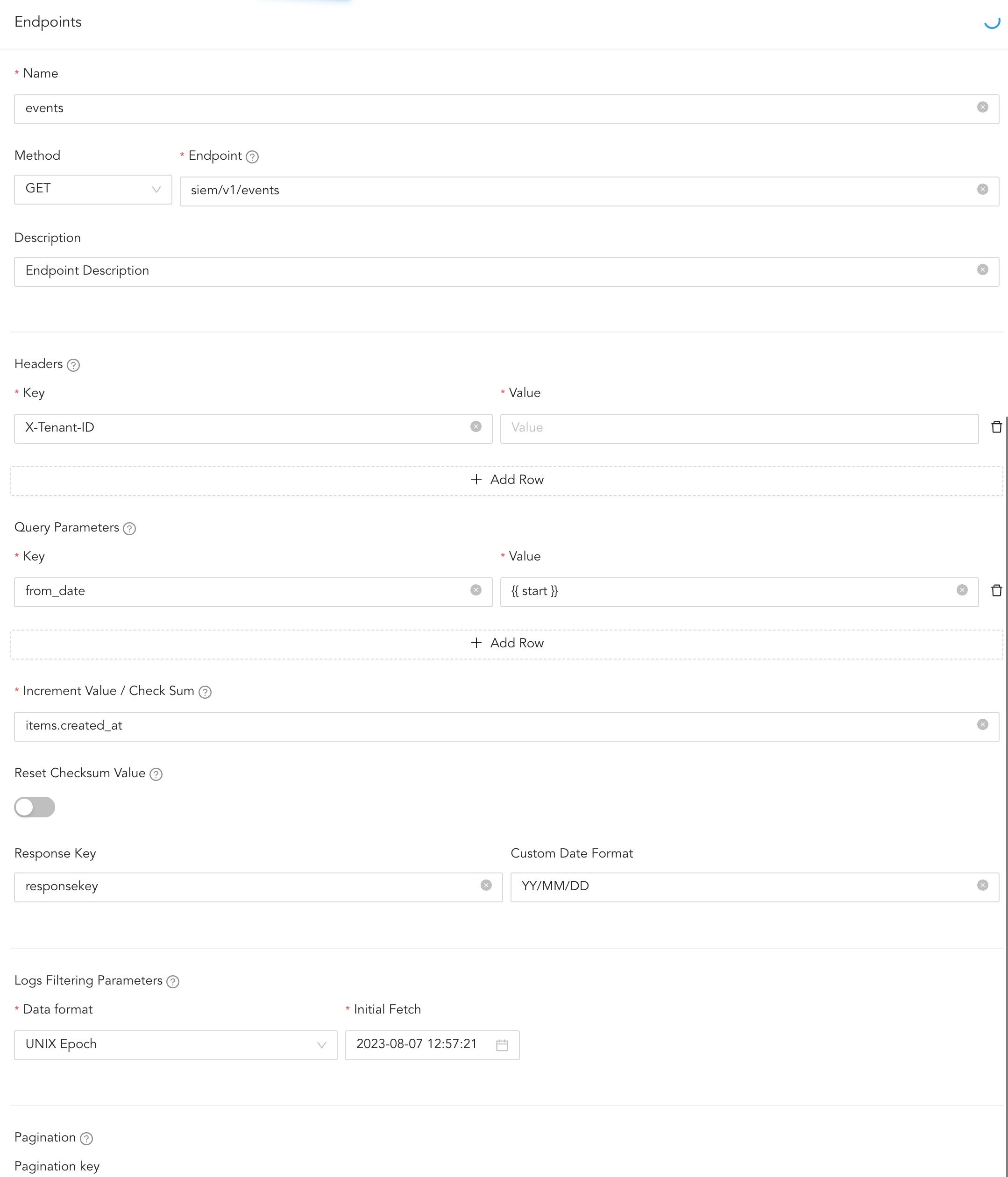
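For readers wiring up a comparable client by hand, the Python sketch below shows roughly what the certificate verification, SSL Certificate File, and proxy settings correspond to in the standard library. `make_opener` and its parameters are hypothetical names; URAF's own HTTP client is not described in this guide.

```python
import ssl
import urllib.request
from typing import List, Optional


def make_opener(
    verify_https: bool = True,
    ca_file: Optional[str] = None,
    proxy_protocol: Optional[str] = None,
    proxy_ip: Optional[str] = None,
    proxy_port: Optional[int] = None,
) -> urllib.request.OpenerDirector:
    """Build a urllib opener that mirrors HTTPS verification and proxy settings."""
    if verify_https:
        # With ca_file set (for example, a self-signed server certificate in PEM format),
        # only that file is trusted; otherwise the system CA store is used.
        context = ssl.create_default_context(cafile=ca_file)
    else:
        context = ssl.create_default_context()
        context.check_hostname = False  # must be disabled before setting CERT_NONE
        context.verify_mode = ssl.CERT_NONE
    handlers: List[urllib.request.BaseHandler] = [urllib.request.HTTPSHandler(context=context)]
    if proxy_ip:
        proxy_url = f"{proxy_protocol or 'http'}://{proxy_ip}:{proxy_port}"
        handlers.append(urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url}))
    return urllib.request.build_opener(*handlers)
```

A request would then go through `make_opener(...).open(url, timeout=...)`, where the timeout plays the role of Request Timeout (secs).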

In Endpoints, configure details about the log source endpoints.

1. Click Endpoints and Add Row.

2. Enter the endpoint’s Name.

3. Select the HTTP request Method.

3.1. If you select GET, continue to Step 4.

3.2. If you select POST, enter the POST request body in JSON format.

You can define the time range for fetching logs in the POST request body:

Use the Jinja keyword {{start}} for the beginning of the log fetching window.

Use {{end}} for the end of the time range.

Example:

{ "filters": [ { "fieldName": "<field>", "operator": "<operator>", "values": "[value]" } ], "search": "<value>", "sortingFieldName": "<field>", "sortDirection": "<sort direction>", "limit": "<limit>", "offset": "<page number>" }

Important

On the first request, {{start}} is replaced with the Initial Fetch value set in the endpoint. In later requests, it is replaced with the checksum value. The {{end}} value is always replaced with the timestamp when the request is sent.

Example:

{ "filters": [ { "fieldName": "StartTimestamp", "operator": "equals", "values": "{{start}}" }, { "fieldName": "EndTimestamp", "operator": "equals", "values": "{{end}}" } ] }

In this example, StartTimestamp and EndTimestamp are the beginning and end of the fetch window.

4. In Endpoint, enter the endpoint path that is appended to the previously added Base URL.

5. Enter a Description for the endpoint.

6. Under Headers, click + Add Row and enter the Key and Value for each custom header.

Note

Avoid using common log filtering fields like start_date or end_date as headers.

Do not add Authorization as a custom header.

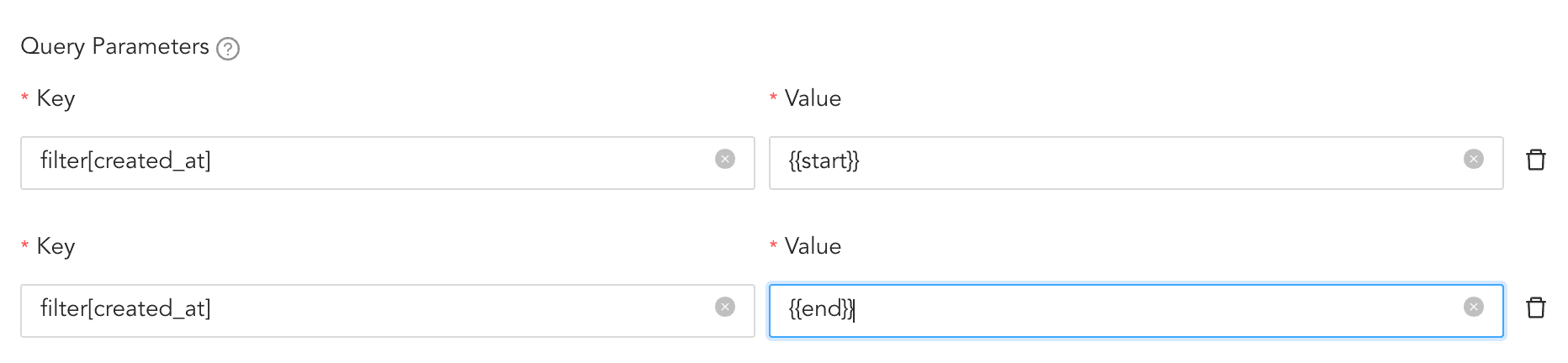

7. In Query Parameters, click + Add Row.

7.1. Enter the Key and Value as required by the API.

Example:

For a query like /api/alerts?$filter=(severity eq 'High') or (severity eq 'Medium'), enter:

Key: $filter

Value: (severity eq 'High') or (severity eq 'Medium')

Query parameters are sent as part of the request URL.

Important

To define a time range using query parameters:

Use {{start}} for the start timestamp.

Use {{end}} for the end timestamp.

Example:

| Key | Value |
|---|---|
| StartTimestamp | {{start}} |
| EndTimestamp | {{end}} |

You can also send multiple query parameters with the same key.

Example:

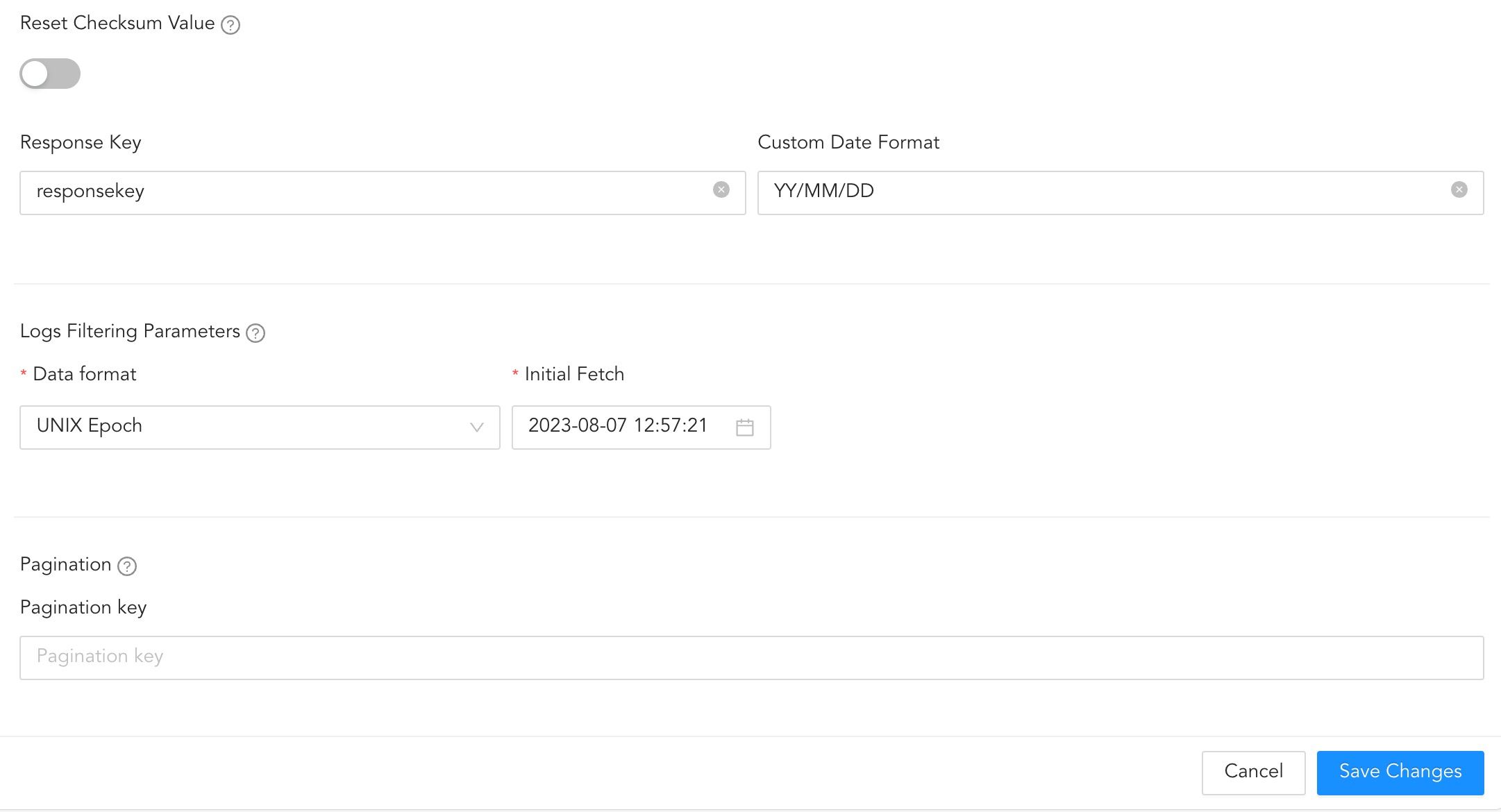

8. In Increment Value / Check Sum, enter the path to the field that tracks progress in log fetching.

Example:

If the field is event_date within an Events object, enter Events.event_date. The value of this field in the most recent log is stored in the CheckSum database. During the next collection cycle, it becomes the {{start}} value, which prevents duplicate logs from being collected.

9. Enter the Response Key. It is used to locate and extract log records from the API response.

10. Enter the Custom Date Format expected in the API response.

Some common formats are:

| Date Type | Format | Example |
|---|---|---|
| UTC | %Y-%m-%dT%H:%M:%SZ | 2023-04-27T07:18:52Z |
| ISO-8601 | %Y-%m-%dT%H:%M:%S%z | 2023-04-27T07:18:52+0000 |
| RFC 2822 | %a, %d %b %Y %H:%M:%S %z | Thu, 27 Apr 2023 07:18:52 +0000 |
| RFC 850 | %A, %d-%b-%y %H:%M:%S UTC | Thursday, 27-Apr-23 07:18:52 UTC |
| RFC 1036 | %a, %d %b %y %H:%M:%S %z | Thu, 27 Apr 23 07:18:52 +0000 |
| RFC 1123 | %a, %d %b %Y %H:%M:%S %z | Thu, 27 Apr 2023 07:18:52 +0000 |
| RFC 822 | %a, %d %b %y %H:%M:%S %z | Thu, 27 Apr 23 07:18:52 +0000 |
| RFC 3339 | %Y-%m-%dT%H:%M:%S%z | 2023-04-27T07:18:52+00:00 |
| ATOM | %Y-%m-%dT%H:%M:%S%z | 2023-04-27T07:18:52+00:00 |
| COOKIE | %A, %d-%b-%Y %H:%M:%S UTC | Thursday, 27-Apr-2023 07:18:52 UTC |
| RSS | %a, %d %b %Y %H:%M:%S %z | Thu, 27 Apr 2023 07:18:52 +0000 |
| W3C | %Y-%m-%dT%H:%M:%S%z | 2023-04-27T07:18:52+00:00 |
| YYYY-DD-MM HH:MM:SS | %Y-%d-%m %H:%M:%S | 2023-27-04 07:18:52 |
| YYYY-DD-MM HH:MM:SS am/pm | %Y-%d-%m %I:%M:%S %p | 2023-27-04 07:18:52 AM |
| DD-MM-YYYY HH:MM:SS | %d-%m-%Y %H:%M:%S | 27-04-2023 07:18:52 |
| MM-DD-YYYY HH:MM:SS | %m-%d-%Y %H:%M:%S | 04-27-2023 07:18:52 |

11. In Logs Filtering Parameters, select the parameters to filter the incoming logs.

11.1. Select a Data format.

11.1.1. Select ISO Date to represent dates in the International Organization for Standardization (ISO) format “yyyy-MM-dd”. Example: 2017-06-10.

Note

If you select ISO Date, the value must be a string in the POST request body.

11.1.2. Select UNIX Epoch to represent dates in UNIX epoch time, the number of seconds that have elapsed since January 1, 1970, 00:00:00 UTC (Coordinated Universal Time). Example: 1672475384.

11.1.3. Select UNIX Epoch (ms) to represent dates in UNIX epoch time with millisecond precision, the number of milliseconds that have elapsed since January 1, 1970, 00:00:00 UTC. Example: 1672475384000.

11.1.4. Select Custom Format to define your own date format using Date/Time patterns.

11.1.5. Select Unique ID to represent data using a unique ID.

Note

If you select Unique ID, the value must be a number in the POST request body.

12. Select an Initial Fetch date. On the first fetch, logs are collected starting from this date.

13. For link-based pagination, in Pagination Key, enter the URL or URI from the API response that points to the next page of results. This value can come from the response body, response header links, or response headers.

Depending on how the API implements pagination, the pagination key can also come from other fields in the API response.

For page-based, offset-based, and cursor-based pagination, you only need to configure the Key and Value in Query Parameters:

Key: The name of the query parameter that the API uses to indicate the position or page of results to fetch. Depending on the pagination type, this can be a page number, an offset, or a cursor.

Value: The dynamic value of the query parameter that determines the next set of results. It is usually derived from the API response and can be a page number, an offset count, or a cursor pointing to the next item in the dataset.

14. Click Save Changes.
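The request-building rules above ({{start}} and {{end}} substitution, the endpoint appended to the Base URL, and query parameters that may repeat a key) can be approximated with the Python sketch below. It is a simplification under stated assumptions: URAF renders templates with Jinja, whereas the hypothetical `render` helper only replaces the exact tokens `{{start}}` and `{{end}}` as text, and `build_request` with its parameters is likewise illustrative.

```python
import json
from typing import Any, Dict, List, Optional, Tuple
from urllib.parse import urlencode


def render(template: str, start: str, end: str) -> str:
    """Replace the exact tokens {{start}} and {{end}} (plain text replacement, not Jinja)."""
    return template.replace("{{start}}", start).replace("{{end}}", end)


def build_request(
    base_url: str,
    endpoint: str,
    query: List[Tuple[str, str]],
    body_template: Optional[str],
    checksum: Optional[str],
    initial_fetch: str,
    now: str,
) -> Tuple[str, Optional[Dict[str, Any]]]:
    """Return the request URL and, for a POST endpoint, the parsed JSON body."""
    # {{start}} is the Initial Fetch value on the first request, then the stored checksum.
    start = checksum if checksum is not None else initial_fetch
    url = f"{base_url.rstrip('/')}/{endpoint.lstrip('/')}"
    params = [(key, render(value, start, now)) for key, value in query]
    if params:
        # A list of pairs (rather than a dict) keeps repeated keys such as severity=...&severity=...
        url += "?" + urlencode(params)
    body = json.loads(render(body_template, start, now)) if body_template else None
    return url, body
```

Because the substitution here is textual, an unquoted placeholder such as `"offset": {{start}}` becomes a JSON number, while a quoted one stays a string; this mirrors the notes that Unique ID values must be numbers and ISO Date values strings in the POST body.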

Configuring Endpoint
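The `%` directives in the Custom Date Format table look like the strftime/strptime codes used by C and Python, so one way to try a format before saving the endpoint is Python's `datetime.strptime`. The sketch below assumes that correspondence, treats results without an offset as UTC by default, and also shows how a moment maps to the ISO Date, UNIX Epoch, and UNIX Epoch (ms) data formats. `parse_api_date` and `filter_value` are hypothetical names.

```python
from datetime import datetime, timedelta, timezone, tzinfo
from typing import Union

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def parse_api_date(text: str, fmt: str, default_tz: tzinfo = timezone.utc) -> datetime:
    """Parse a timestamp with a Custom Date Format; results without an offset get default_tz."""
    parsed = datetime.strptime(text, fmt)
    # Formats with a literal "Z" or "UTC", or with no zone at all, yield naive datetimes.
    return parsed if parsed.tzinfo is not None else parsed.replace(tzinfo=default_tz)


def filter_value(moment: datetime, data_format: str) -> Union[str, int]:
    """Express an aware datetime in one of the Logs Filtering Parameters data formats."""
    moment = moment.astimezone(timezone.utc)
    if data_format == "ISO Date":
        return moment.strftime("%Y-%m-%d")  # a string, e.g. "2017-06-10"
    if data_format == "UNIX Epoch":
        return (moment - EPOCH) // timedelta(seconds=1)  # whole seconds as an int
    if data_format == "UNIX Epoch (ms)":
        return (moment - EPOCH) // timedelta(milliseconds=1)  # whole milliseconds as an int
    raise ValueError(f"unsupported data format: {data_format!r}")
```

For example, `filter_value(parse_api_date("Thu, 27 Apr 2023 07:18:52 +0000", "%a, %d %b %Y %H:%M:%S %z"), "UNIX Epoch")` gives the epoch seconds for that timestamp. Python's `%z` accepts both `+0000` and `+00:00` from version 3.7 onward, and `%a`, `%A`, `%b`, and `%p` depend on the process locale.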

To edit the endpoint configuration, click the ( ) icon under Action and click Edit. Make the necessary changes and click Save Changes.


To delete the endpoint configuration, click the ( ) icon under Action and click Delete.


To reset the Checksum values, toggle Reset.
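How Response Key, Increment Value / Check Sum, a link-based Pagination Key, and the Reset toggle work together can be pictured with the Python sketch below. Everything in it is illustrative: `CheckSumStore`, `fetch_cycle`, and `get_path` are hypothetical names, the next-page link is assumed to sit in the response body, and records are assumed to arrive oldest first so that the last one is the most recent.

```python
from typing import Any, Callable, Dict, List, Optional


def get_path(obj: Any, path: str) -> Any:
    """Follow a dotted path such as "Events.event_date"; return None if a key is missing."""
    for key in path.split("."):
        if not isinstance(obj, dict) or key not in obj:
            return None
        obj = obj[key]
    return obj


class CheckSumStore:
    """Hypothetical stand-in for the CheckSum database: one saved value per endpoint."""

    def __init__(self, initial_fetch: str) -> None:
        self.initial_fetch = initial_fetch
        self.value: Optional[Any] = None

    def start(self) -> Any:
        # {{start}}: Initial Fetch on the first run, afterwards the stored checksum.
        return self.value if self.value is not None else self.initial_fetch

    def reset(self) -> None:
        # Toggling Reset: the next cycle starts again from Initial Fetch.
        self.value = None


def fetch_cycle(
    fetch_page: Callable[[str], Dict[str, Any]],
    first_url: str,
    response_key: str,
    pagination_key: str,
    checksum_path: str,
    store: CheckSumStore,
) -> List[Dict[str, Any]]:
    """Follow link-based pagination, collect records, and save the latest checksum."""
    records: List[Dict[str, Any]] = []
    url: Optional[str] = first_url
    while url:
        page = fetch_page(url)
        records.extend(get_path(page, response_key) or [])  # Response Key locates the records
        url = get_path(page, pagination_key)  # next-page link, or None on the last page
    if records:
        latest = get_path(records[-1], checksum_path)
        if latest is not None:
            store.value = latest
    return records
```

Page-based, offset-based, and cursor-based pagination would instead change a query parameter between calls rather than follow a link from the response.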

Resetting Checksum

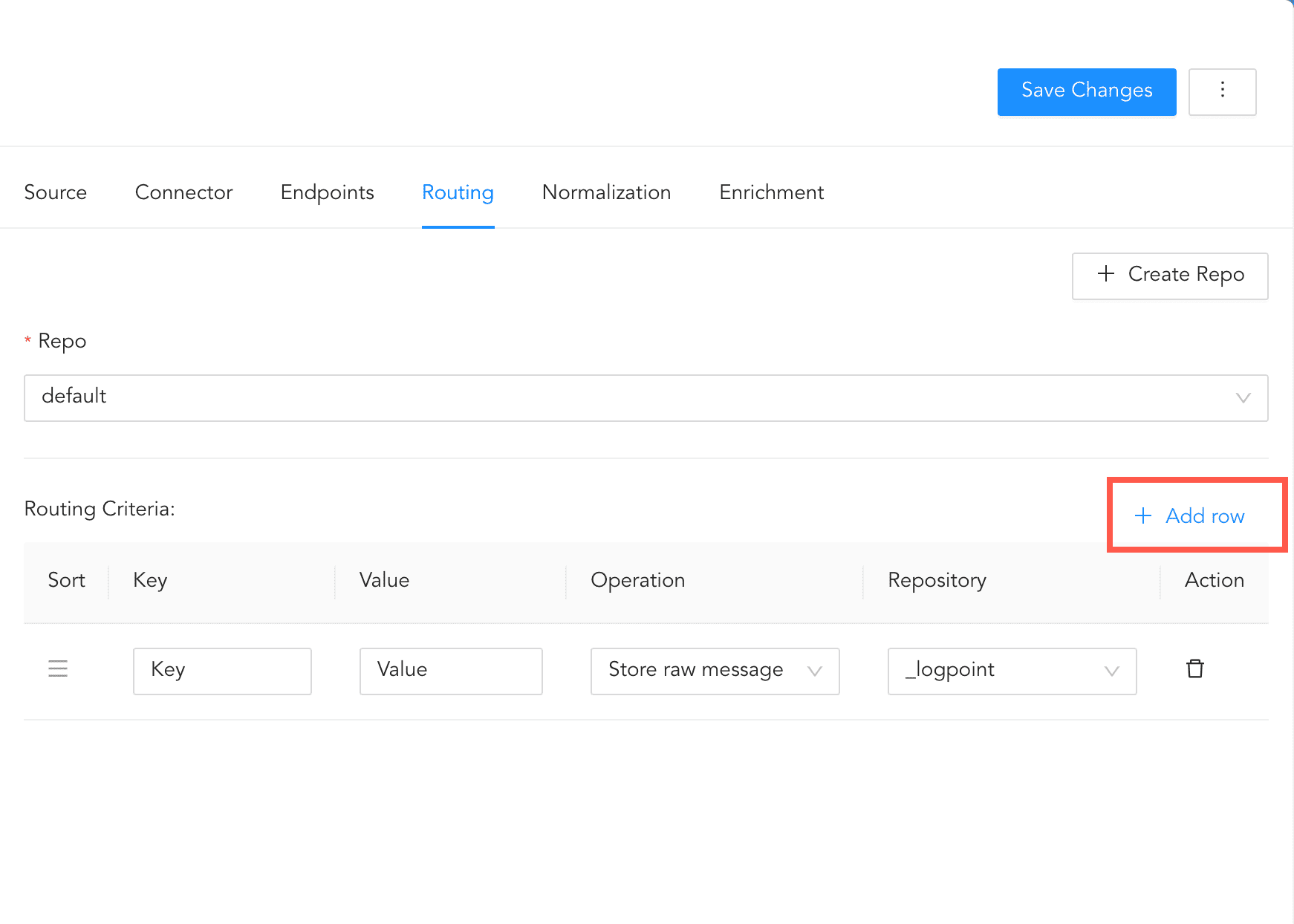

Routing lets you create repos and routing criteria for URAF. Repos store incoming logs, and routing criteria determine where the logs are sent.

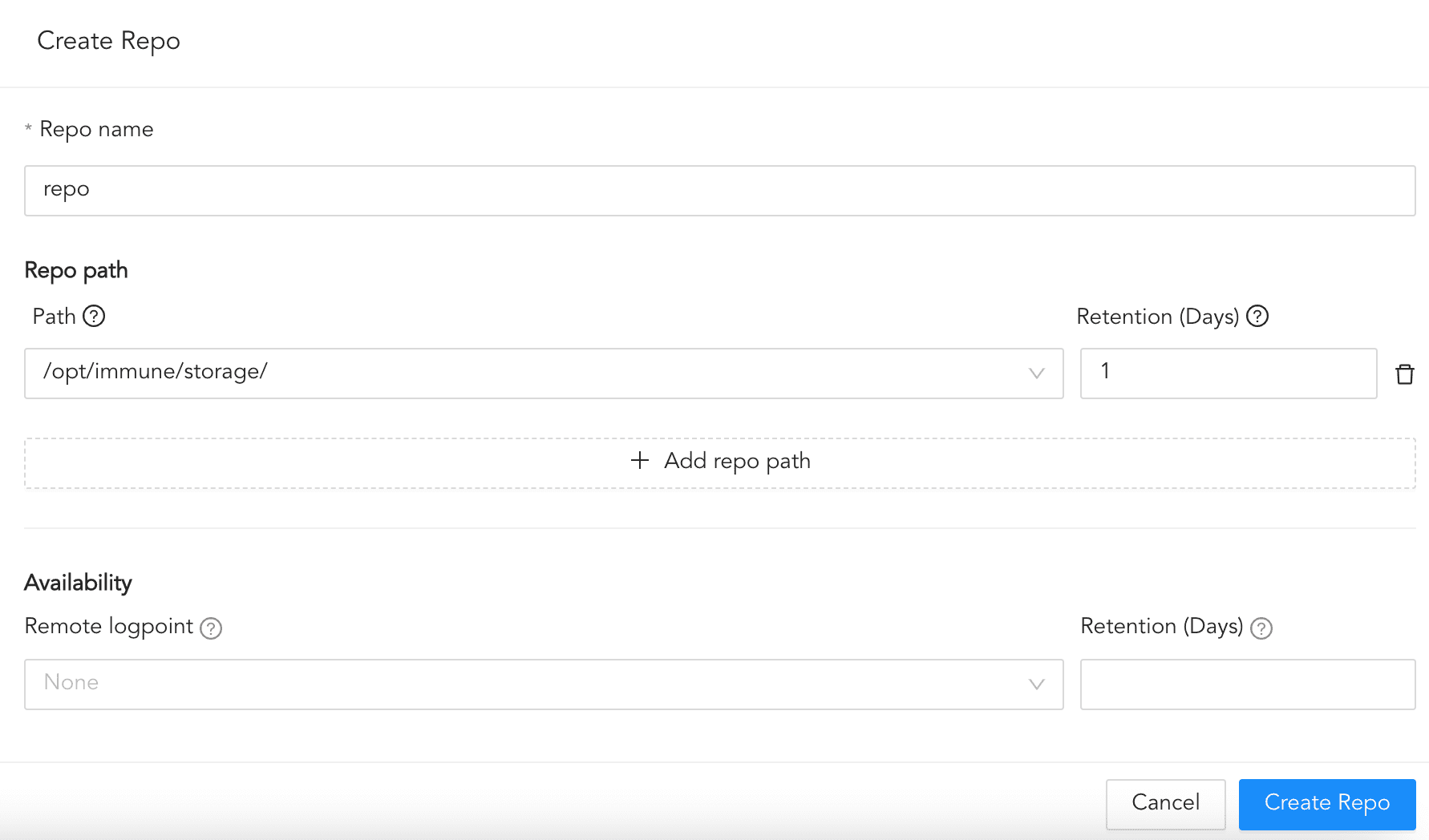

To create a repo:

1. Click Routing and + Create Repo.

2. Enter a Repo name.

3. In Path, enter the location to store incoming logs.

4. In Retention (Days), enter the number of days logs are kept in the repo before they are automatically deleted.

5. In Availability, select the Remote logpoint and Retention (Days).

6. Click Create Repo.

Creating a Repo

In Repo, select the created repo to store logs.

To create Routing Criteria:

1. Click + Add row.

2. Enter a Key and Value. The routing criterion applies only to logs that have this key-value pair.

3. Select an Operation for logs that have this key-value pair.

3.1. Select Store raw message to store both the incoming and the normalized logs in the selected repo.

3.2. Select Discard raw message to discard the incoming logs and store the normalized ones.

3.3. Select Discard entire event to discard both the incoming and the normalized logs.

4. In Repository, select a repo to store logs.
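The effect of the three operations can be summarized with the Python sketch below. It is a hedged model, not Logpoint's routing engine: `RoutingCriterion` and `route` are hypothetical, and both the first-match rule and the fallback for unmatched events (the repo chosen in Repo, with the raw message kept) are assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class RoutingCriterion:
    key: str
    value: str
    operation: str  # "Store raw message", "Discard raw message" or "Discard entire event"
    repository: str


def route(
    event: Dict[str, str], criteria: List[RoutingCriterion], default_repo: str
) -> Optional[Tuple[str, bool]]:
    """Return (repository, keep_raw_message), or None when the whole event is discarded."""
    for criterion in criteria:  # assumption: the first matching criterion wins
        if event.get(criterion.key) == criterion.value:
            if criterion.operation == "Discard entire event":
                return None
            return criterion.repository, criterion.operation == "Store raw message"
    # Assumption: unmatched events go to the repo selected in Repo, raw message kept.
    return default_repo, True
```

Under these assumptions, an event matching a Discard raw message criterion is routed to that criterion's repository with only its normalized form kept.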

Creating Routing Criteria

Click the ( ) icon under Action to delete the created routing criteria.


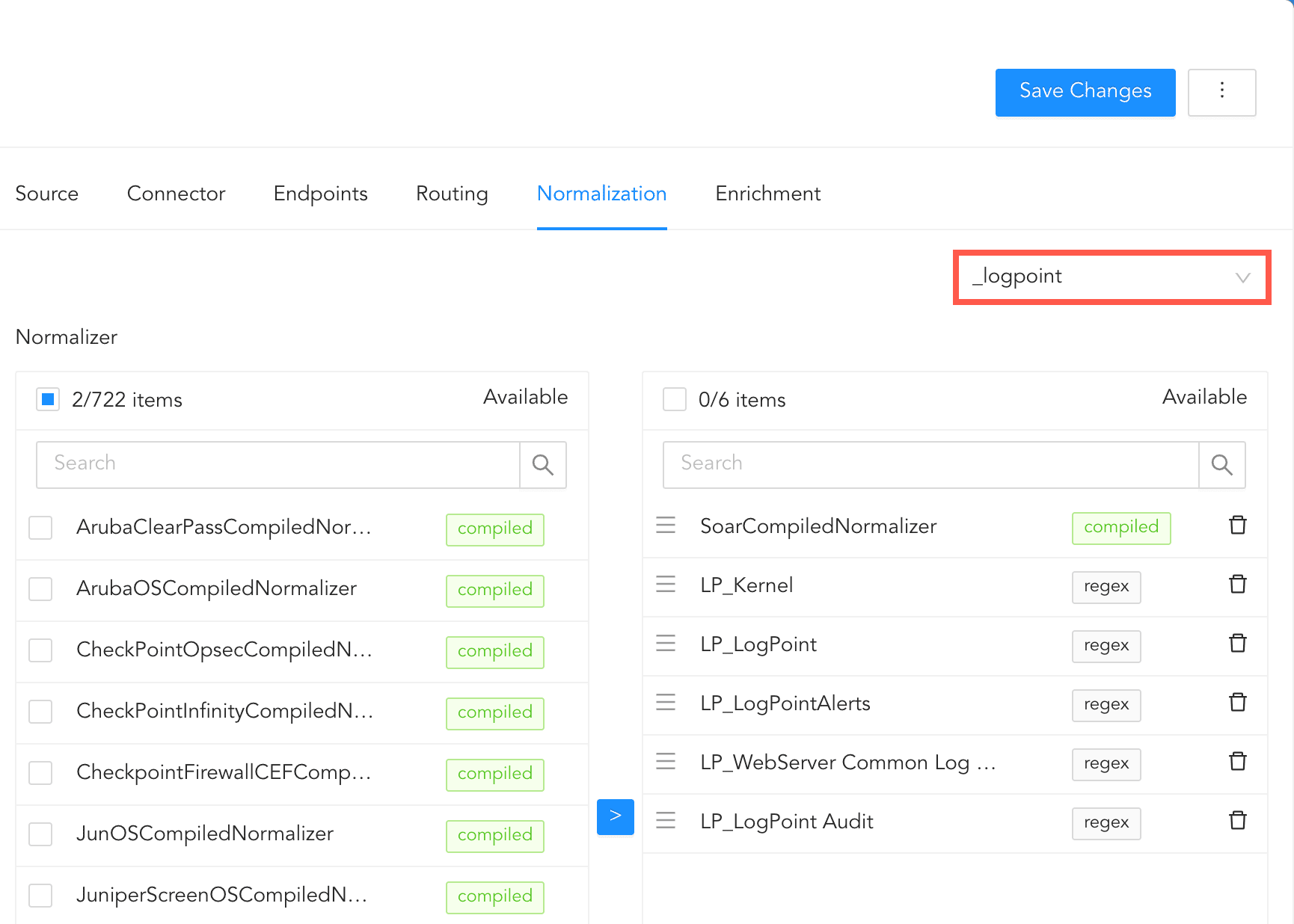

In Normalization, select normalizers for the incoming logs. Normalizers transform incoming logs into a standardized format for consistent and efficient analysis.

1. Click Normalization.

2. Either select a previously created normalization policy from the Select Normalization Policy dropdown, or select a Normalizer from the list and click the swap ( ) icon.


Adding Normalizers

In Enrichment, select an enrichment policy for the incoming logs. Enrichment adds details such as user information or geolocation to logs before analysis.

1. Click Enrichment.

2. Select an Enrichment Policy.

Click Create Log Source to save the configurations of Source, Connector, Endpoints, Routing, Normalization and Enrichment.
